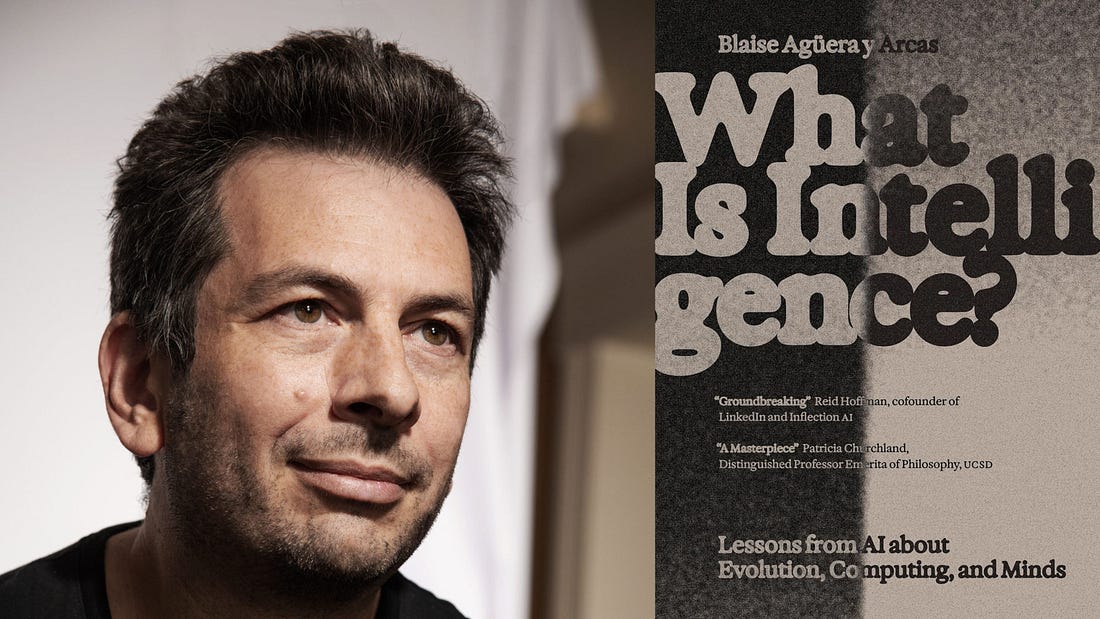

🧠 Neural Dispatch: Google’s silently leading cancer research, and decoding AI’s intersections

The biggest AI developments, decoded. October 22, 2025.

Hello!

Cognitive warmup. Among the frontier AI players, Google remains the only one that truly owns the entire stack, from the Gemini family of models and massive proprietary datasets to its cloud infrastructure and custom tensor processing units (TPUs). But what really sets it apart is the research-first instinct that never went out of fashion in Mountain View. While others love grandstanding about “solving world hunger” or “curing cancer,” Google quietly went ahead and started doing the work.

Its latest milestone, developed in collaboration with Yale University, is Cell2Sentence-Scale 27B (C2S-Scale), a new 27 billion parameter foundation model designed to decode the language of individual cells. It’s not hyperbole to call this a landmark, both in AI for science and in cancer research itself. The C2S-Scale 27B model was tasked with identifying a drug that could act as a conditional amplifier, boosting immune signals in specific cellular environments. The results were staggering: “C2S-Scale successfully identified a novel interferon-conditional amplifier, revealing a new pathway to make ‘cold’ tumors ‘hot,’ potentially improving their response to immunotherapy,” the researchers wrote, cautiously calling it “an early first step.”

But make no mistake: this is Google at its most dangerous: focused, methodical, and quietly disruptive. While the rest of the AI world is busy hosting panels and pitching visions of utopia, Google’s lab lights are still on, deep in the night, teaching machines to understand biology itself. And that should make every other AI company a little nervous, once they’re done counting their valuations and clapping in their own echo chambers.
THINKING

Late last week, I had one of those rare opportunities that few in global tech journalism have enjoyed so far: a long, uninterrupted conversation with a man who wears many hats and, arguably, uses much more of his brain than most of us do. He joined us from the US, having skipped dinner to make time for the chat. (Not that it worried him; he calls himself a night owl, which means the meal was just postponed, not missed.) That man is Blaise Agüera y Arcas, a name that sits comfortably at the intersection of art, science, and intelligence itself. He is Vice President, Fellow, and Chief Technology Officer (CTO) of Technology & Society at Google, where he leads the Paradigms of Intelligence (Pi) team, a group tasked with exploring the boundaries of what artificial intelligence can become. And in our conversation came a statement that lingers: “We already have AGI.” A verdict as calm as it was seismic, and a striking departure from the industry’s endless obsession with when artificial general intelligence might arrive. And why.

Read: AGI isn’t coming, it is already here: Google’s Blaise Aguera y Arcas

The conversation with Agüera y Arcas spanned a lot more, some of which I’ve covered here in this week’s Neural Dispatch. The reason is simple: it wouldn’t hurt to listen to a voice of reason in an era when AI bosses seem to have more of a funding and valuation obsession, alongside a fixation with targets and an expansive future, without exactly a solid foundation for any of that. The electricity consumption targets, for example, are something we chatted about last week. Here are edited excerpts from the conversation around creativity and AI, touching upon empathy and self-reflection, as well as principles of interaction.

→ Definition of creativity in a time when generative AI is defining social media feeds and when AI itself has become a creator.

Blaise Agüera y Arcas: A few years ago, I wrote an essay called Art in the Age of Machine Intelligence.
This was really at the very beginning of generative models as we know them, well before we had LLMs, but we had started to have models that could render images. They weren’t realistic yet, but were really interesting. It was the era of DeepDream, if you remember, where everything was psychedelic. But we could see that space enlarging and enriching. We knew that AI that could generate really convincing media was coming. That was the basis for us founding the Artists + Machine Intelligence program at Google.

I think that defensive reactions, that it erases the need for artists or devalues human creativity, are reductive and pose a false dichotomy. It is sort of the same as when photography was invented in the 19th century: there was an uproar in certain quarters that it supposedly devalued painting. But you know what actually happened, of course: a golden age of art followed right after the invention of photography, some of which involved photographers who really leaned into the technology to do things that were difficult to do with painting. This is not to say that there’s not a lot of garbage. In fact, there’s a lot of AI generated slop, for lack of a better word. Then again, human output is not uniformly brilliant either. But I expect that the whole space of outputs, those that humans make both with and without AI and those that AI makes, is in the process of expanding, and I’m very, very curious to see how that plays out.

→ Can AI, despite knowledge and reasoning, simulate empathy, and even self-reflection?

Blaise Agüera y Arcas: You use the word emulate, and it’s an important qualifier, because it’s a reasonable belief that in order to actually have empathy, rather than emulate it, you have to have feelings. And now we get into more complex territory than just cognition. When an LLM reads a short story and you ask it, “how does this character feel at this point?”, it answers convincingly.
We’ve run a bunch of experiments with LLMs to test for theory of mind, which covers the factual as well as the emotional, including how somebody will behave or react in a certain way. And they do a good job of this, which is not surprising because when you pre-train them on lots of human dialogue, our feelings and our emotions are a part of that dialogue. So it understands all of those things. The question of whether it has those feelings itself is, I think, a lot less answerable. It certainly doesn’t have the same chemistry that we do. It’s doing all these computations on a very different platform.

Neural Dispatch is your weekly guide to the rapidly evolving landscape of artificial intelligence. Each edition delivers curated insights on breakthrough technologies, practical applications, and strategic implications shaping our digital future. Written and edited by Vishal Mathur. Produced by Shad Hasnain.